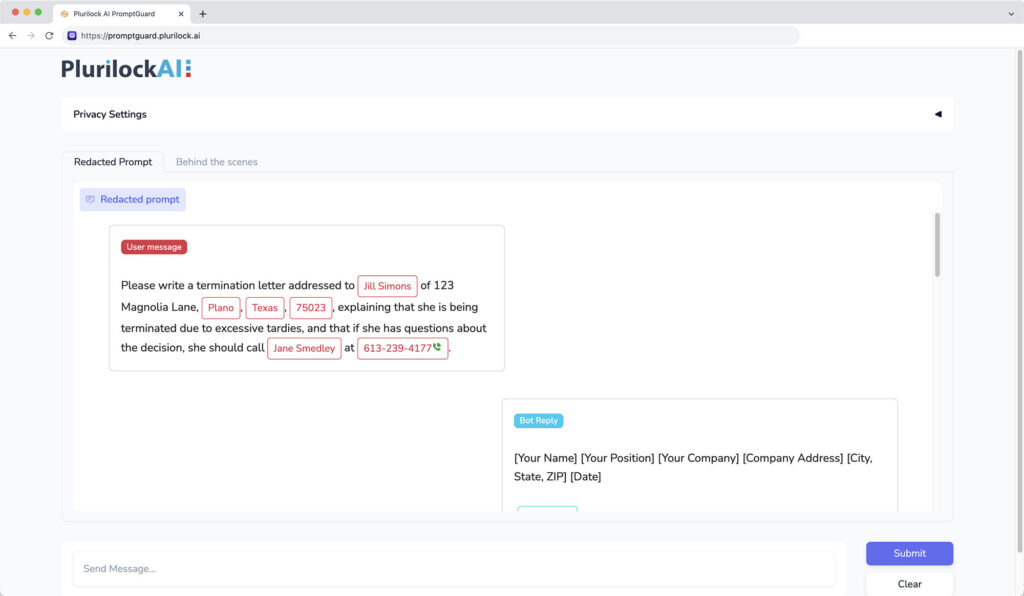

Plurilock AI PromptGuard automatically detects and redacts confidential and sensitive information in AI prompts, providing guardrails for employee AI use.

2510 W. 237th Street, Suite 202, Torrance, CA 90505

+1 888 282 0696

info@aurorait.com

Copyright © Aurora Systems Consulting, Inc. All rights reserved.